Robots.txt on Flywheel

A robots.txt file is a set of instructions that tell search engine bots how to crawl and index your site, and is typically stored in the site’s root directory. Site crawlers (also known as spiders or bots) are used by search engines to scan your site’s pages in order to help them appear in search results. The instructions from robots.txt help guide the crawlers to what is and what isn’t available to be crawled.

Sites on Flywheel have a default robots.txt file in place when first created. It contains the following lines:

User-agent: *
Disallow: /calendar/action*
Disallow: /events/action*
Disallow: /cdn-cgi*
Allow: /*.css
Allow: /*.js
Disallow: /*?
Crawl-delay: 3

Note

The settings above are simply Flywheel defaults. Need to customize your site’s robots.txt? No problem! It can be quickly edited via SFTP.

Note

The Yoast SEO plugin can change your robots.txt file if given permission, which is easy to overlook during setup. Its configuration lacks nearly all of the key features discussed below and should be changed to our settings in order to better control bot traffic on your site. Refer to The robots.txt file in Yoast SEO for more information.

So that’s all well and good, but what do those lines actually mean?

Good question! We’ll go through each line below.

User-Agent: *

User-Agent: * targets all bots that obey robots.txt. Thanks to the asterisk, it says, “the following rules are for all bots”. It can also be changed to target the specific user-agent of a robot that might be acting out of line. That said, in most cases it’s best to keep the asterisk/wildcard entry in place and create a second entry for the specific user-agent, so the rules meant for one bot don’t accidentally apply to all the others.
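To make the “second entry” idea concrete, here is a minimal sketch using Python’s standard urllib.robotparser module. The bot names BadBot and FriendlyBot, the /private/ path, and example.com are hypothetical and are not part of Flywheel’s defaults. The sample also avoids wildcard paths, because this particular parser only matches plain path prefixes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for every bot, plus a stricter
# group for a single misbehaving bot ("BadBot" is a made-up name).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /
"""

def build_parser(text=ROBOTS_TXT):
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

parser = build_parser()

# A bot without its own entry falls back to the "*" group.
generic_ok = parser.can_fetch("FriendlyBot", "https://example.com/blog/")
generic_blocked = parser.can_fetch("FriendlyBot", "https://example.com/private/notes")

# BadBot has its own entry, so only that entry applies to it.
badbot_ok = parser.can_fetch("BadBot", "https://example.com/blog/")

print(generic_ok, generic_blocked, badbot_ok)  # prints: True False False
```

Because BadBot gets its own group, tightening its rules leaves the wildcard group, and every other bot, untouched.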

Disallow: /calendar/action* Disallow: /events/action*

The second and third lines, Disallow: /calendar/action* and Disallow: /events/action*, prevent bots from accessing calendar and events-focused URLs, where a visit can inadvertently cause additional events to be created. The asterisk, much like the one in the User-agent line, stands for “literally anything”, which makes it possible to write rules in shorthand.

Disallow: /cdn-cgi*

The fourth line, Disallow: /cdn-cgi*, prevents bots from crawling the Cloudflare endpoint, which is the recommended best practice with their services.

Allow: /*.css Allow: /*.js

The fifth and sixth lines, Allow: /*.css and Allow: /*.js, are extremely important. They are “exceptions” to the following line, which disallows query strings. These lines allow any site-level asset needed to render the page (such as “main.css” or “dist.js”) to be read by Google, which it needs in order to check sites for mobile compatibility. Without them, Google will report incorrect stats about a site, likely saying that it’s not mobile-friendly, and we don’t want that!

Disallow: /*?

This line blocks bots from accessing anything on the site that has a question mark in the URL, such as search and filtering pages (e.g. /?search=my-search-parameter or /?filter=ascending). For example, this is useful when you want to exclude all URLs that contain a dynamic parameter, like a session ID, so that bots don’t crawl duplicate pages.
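The way the wildcard, Allow, and Disallow lines interact can be sketched in a few lines of Python. This is only an illustration, not how any particular crawler is implemented. It assumes the precedence convention described in Google’s documentation and RFC 9309 (the longest matching pattern wins, and Allow wins a tie); other bots may resolve conflicts differently. The function names and sample paths are made up for the example.

```python
import re

# Flywheel's default path rules, as listed earlier in this article.
DEFAULT_RULES = [
    ("disallow", "/calendar/action*"),
    ("disallow", "/events/action*"),
    ("disallow", "/cdn-cgi*"),
    ("allow", "/*.css"),
    ("allow", "/*.js"),
    ("disallow", "/*?"),
]

def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    "*" matches any run of characters; a trailing "$" anchors the end.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(path, rules=DEFAULT_RULES):
    """Assumed precedence: longest matching pattern wins, Allow wins ties."""
    verdict, best_len = True, -1  # no matching rule means "allowed"
    for kind, pattern in rules:
        if not pattern_to_regex(pattern).match(path):
            continue
        allow = kind == "allow"
        if len(pattern) > best_len or (len(pattern) == best_len and allow):
            verdict, best_len = allow, len(pattern)
    return verdict

# Hypothetical sample paths.
for path in [
    "/blog/hello-world/",
    "/?search=my-search-parameter",
    "/wp-content/themes/demo/main.css?ver=1.0",
    "/events/action-add/",
]:
    print(path, "->", "allowed" if is_allowed(path) else "blocked")
```

Under these assumptions, the stylesheet URL stays crawlable even though it contains a question mark, because /*.css is a longer (more specific) pattern than /*?. That is the “exception” behavior described above.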

Crawl-delay: 3

This line asks bots to wait three seconds between requests. Without it, there is effectively no delay, which means bots can crawl as often as they’d like, sometimes tens of times per second. Not all bots adhere to the crawl-delay directive, but it’s still good practice to throttle the ones that do.
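From the bot’s side, honoring this directive might look roughly like the sketch below, which reads the delay with Python’s standard urllib.robotparser module and spaces out requests accordingly. The bot name ExampleBot, the helper names, and the sample paths are hypothetical, and the rules are trimmed down to a wildcard-free subset for brevity.

```python
from urllib.robotparser import RobotFileParser

# A trimmed-down, wildcard-free sample containing the crawl delay.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cdn-cgi
Crawl-delay: 3
"""

def crawl_delay_for(user_agent, robots_txt=ROBOTS_TXT):
    """Return the crawl delay in seconds for this bot, or 0 if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent) or 0

def schedule(paths, delay):
    """Pair each path with the second (from the start) it would be requested."""
    return [(index * delay, path) for index, path in enumerate(paths)]

delay = crawl_delay_for("ExampleBot")
plan = schedule(["/", "/about/", "/blog/"], delay)
print(plan)  # prints: [(0, '/'), (3, '/about/'), (6, '/blog/')]
```

A well-behaved crawler would then sleep until each offset before making the request; a bot that ignores the directive simply never performs this lookup.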

Note

Looking for some more tips and tricks on configuring your robots.txt file? Moz has a great article with all sorts of configurations and scenarios.

Is a robots.txt file even necessary?

99% of the time, yes.

Even if you don’t have a robots.txt file, search engine bots will still manage to find, crawl and index your site. However, what they decide to index and how frequently they crawl the site will be entirely up to their discretion!

This isn’t much of a big deal on smaller sites. However, as your site grows, you’ll most likely want more control over how your site is crawled and indexed. Why? Search bots have page crawl quotas, so they can only crawl a certain number of pages during a crawl session. If they don’t finish crawling your site in that session, they’ll come back around the next time to finish the job.

If you have a lot of content on your site, your site’s indexing rate might be affected. This is where the benefit of a robots.txt file comes into play, since you can prevent bots from crawling unnecessary pages and reaching their crawl quotas on plugin or theme files, or simply pages you no longer want found online.

Need help?

If you have any questions, our Happiness Engineers are here to help!

Getting Started

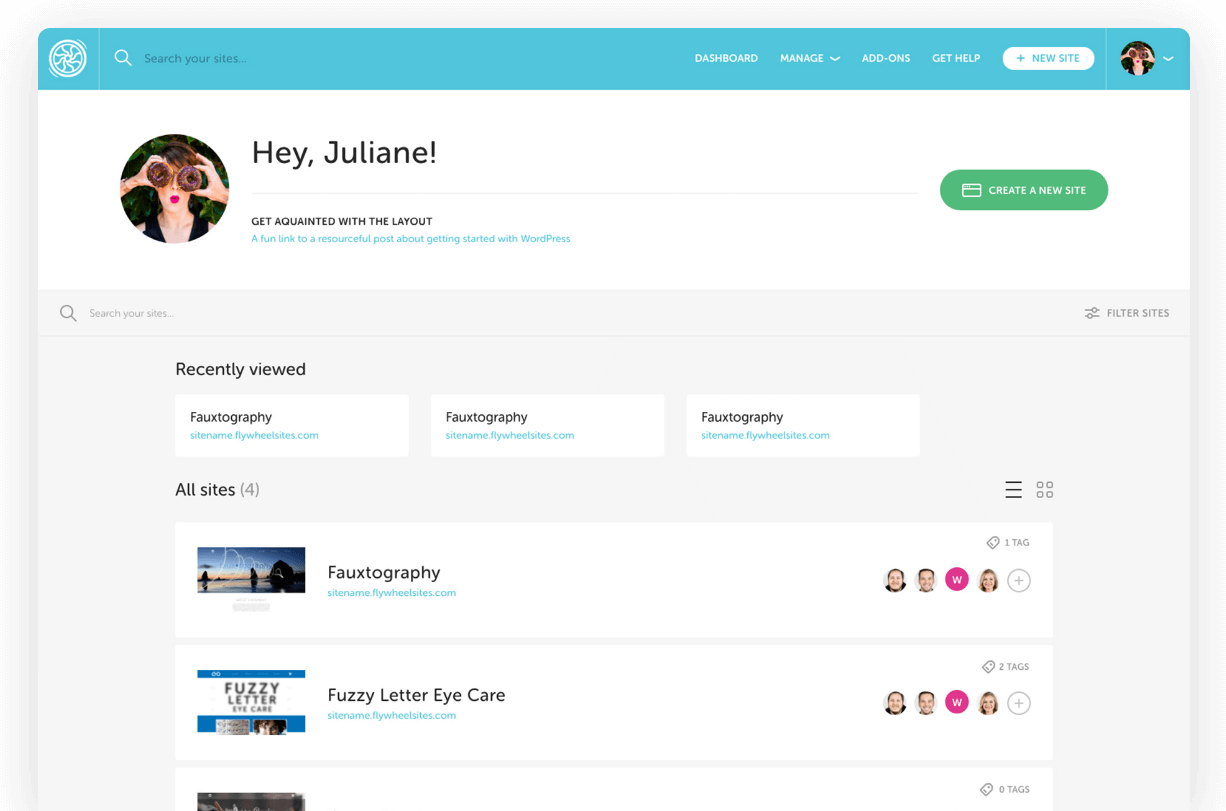

New to Flywheel? Start here, we've got all the information you'll need to get started and launch your first site!

View all

Account Management

Learn all about managing your Flywheel user account, Teams and integrations.

View all

Features

Flywheel hosting plans include a ton of great features. Learn about how to get a free SSL certificate, set up a staging site, and more!

View all

Platform Info

All the server and setting info you'll need to help you get the most out of your Flywheel hosting plan!

View all

Site Management

Tips and tricks for managing your sites on Flywheel, including going live, troubleshooting issues and migrating or cloning sites.

View all

Growth Suite

Learn more about Growth Suite, our all-in-one solution for freelancers and agencies to grow more quickly and predictably.

Getting started with Growth Suite

Growth Suite: What are invoice statuses?

Growth Suite: What do client emails look like?

Managed Plugin Updates

Learn more about Managed Plugin Updates, and how you can keep your sites up to date, and extra safe.

-

Restoring Plugin and Theme Management on Flywheel

-

Managed Plugin Updates: Database upgrades

-

Managed Plugin Updates: Pause plugin updates

Local

View the Local help docs

Looking for a logo?

We can help! Check out our Brand Resources page for links to all of our brand assets.

Brand Resources All help articles

All help articles